Difference between revisions of "User talk:Bossenne"

(→Data Structures) |

(→Software Engineering) |

||

| Line 198: | Line 198: | ||

* Unformatted Fortran90 format data files | * Unformatted Fortran90 format data files | ||

| − | == Software Engineering == | + | ==<font color = darkblue> Software Engineering </font>== |

* Fortran90 with modules | * Fortran90 with modules | ||

* Keyword-based input file | * Keyword-based input file | ||

| − | |||

== Gallery == | == Gallery == | ||

Revision as of 15:32, 12 July 2012

Contents

- 1 Work in progress, come back later ! -- Partie publique

- 2 Partie privée -- User's manual

- 2.1 Parameters

- 2.2 Organization of H-Allegro

- 2.3 Post-treatment tools

- 2.4 Test cases

- 2.5 Profiling and Scalability

- 2.6 Model of calculation

- 2.7 Notes et Références

Work in progress, come back later ! -- Partie publique

Motivation

H-Allegro is a parallel, high accuracy, compressible, reactive, direct Navier Stokes solver (DNS). It is the MPI-parallelized evolution of the former code ALLEGRO. From ALLEGRO, H-Allegro has mainly been developed by two PhD students: Eric Albin and Marianne Sjostrand, and by the PARALGO company.

Equations and Hypotheses

Equations

Hypotheses

The hypotheses to simplify the Navier-Stokes equations are :

- The total energy balance according to Poinsot-Veynante is described below, if :

The term can be ignored with regard to , which is the heat release due to combustion.

This release can be defined by these equations : , where is the specific heat of reaction, is the standard enthalpy of formation and is the reaction rate.

is the power produced by volume forces on species k, considered as non-existent since there are no volume forces.

is the heat source term which is null here since there is no electric spark, laser or radiative flux.

is the mass fraction of the species k.

The tensor is composed of the tensor of constraints and the tensor of pressure :

Thanks to these hypotheses, the total energy balance becomes :

With corresponding to the heat release due to combustion, .

- The term is not used. It usually defines other forces like electromagnetical forces or weight.

- is either a fuel or a combustive source term.

if it is a fuel source term or if it is the oxidant one.

and are respectively the molar mass of fuel and oxidant.

- can be determined by the Arrhenius law :

- The heat flows due to mass fraction gradients and the species molecular distribution due to temperature gradients are neglicted (cf : Soret and Dufour).

- The termal conductivity, is given by :

Where is the Prandtl number :

This number compares the momemtum distribution with the heat distribution.

- The Schmidt number, , is :

- The Lewis number for the species k, , is deducted from the Schmidt and Lewis numbers :

Where is the species diffusion.

We will consider the Lewis number egals to 1 in order to simplify the physics of the pre-mixture flame.

- From the relation of these last three numbers, we can deduct that :

Therefore, the diffusion coefficient can be defined as :

- We use the Fick Law which is a simplified diffusion law :

is the flux of particles density.

C is the particles density.

Thus the diffusion coefficients of the various species are characterized by the number of Lewis.

- We consider that the gas is a perfect, viscous, reagent and diatomic gas. As a consequence of the viscosity, the compressible effects are not dominating, which involves that the bulk viscosity is neglicted.

- The gas is reagent thus the mixture of various species is not isothermal, they must be individually followed. It implies that the calorific capacities depend on the temperature and on the composition.

- For a diatomic gas, the calorific capacities can be defined as follows :

- The gas respects the law of perfect gases :

- The combustion is an irreversible transformation whose creation of entropy is compensated with an entropy given by the system to the outside, because of a thermal transfer, thus we can consider that the transformation is isentropic. Consequently, the law of Laplace for the thermodynamics is applicable :

- According to the power law, the dynamic viscosity depends only on the temperature :

- The tensor of the constraints, by respecting the hypothesis of a Newtonian fluid, is :

The Kronecker symbol, , egals 1 if i=j, 0 else.

- The acoustic Reynolds number is defined such as :

c is the speed of sound : 340 m., L is the characteristic length and is the kinematic viscosity of air : 1.45e-5 m²..

Closure equations

Models

- Mixing : constant Schmidt number

- Chemistry : one step Arrhenius' law

- Viscosity : power law

Numerics

- Spatial : 6th-order finite difference scheme and original staggered scheme developped by E. Albin [1]

- Temporal : 3rd order explicit time integration (Runge-Kutta3) [2]

- Chemical :

- One step chemistry based on an Arrhenius' law [3]

- Partially premixed gas

- Boundary conditions : the 3D-NSCBC processing.

Data Structures

- 1D, 2D, 3D structured solver

- Optimized non-blocking MPI communications

- Homogeneous mesh refinement

- VTK post-treatment possible

- Binary files

- Unformatted Fortran90 format data files

Software Engineering

- Fortran90 with modules

- Keyword-based input file

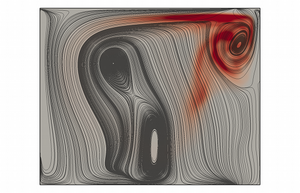

Gallery

Performances

Scalability Test

Télécharger les explications du test.

En réalité dans la partie privée, juste un test !

Partie privée -- User's manual

Parameters

Makefile

The makefile allows to compile routines and subroutines. In order to compile, the command "make" will be typped in the terminal.

The default target of this file is "all : :".

Since various computers can be used, the compilation has to be conditional. That is to say that several configurations of compilation are available in function of the considered cluster (Antares, Jade, ...) :

- FC indicates what is the used compiler. For instance : if the language is fortran90 and MPI, it will be written FC = mpif90.

- A part of the code is written in C, it is about the file Vtk_bin_write.c. So the compiler for the C language has to be defined thanks to CFLAGS.

- FFLAGS defines the optimization options for the Fortran compiler. For instance :

FFLAGS = -O3 -ipo -p.

"O3" is an optimization option of level 3. From this level, semantics of the routine can be modified. The optimization will be more aggressive concerning the memory access and the buckles management. The compilation time will be more important than for a lower level and the code size will be also bigger.

"ipo" is an interprocedural optimization option. It authorizes the inlining of present subroutines in seperated source files.

"p" is a profiling option.

The common files of the executable for calculation and the common files of the executable for postprocessing have to be defined.

For example :

all : : Mezo3D df6_pospro COMMON_OBJS += communs.o COMMON_OBJS += lectus.o

Then Allegro files are compiled and an executable is created :

Mezo3D : $(ALLEGRO_OBJS) $(QUIET_LINK)$(FC) $(FFLAGS) -o $@ $(filter %.o,$ ˆ)

The same thing is done for the postprocessing files :

df6_pospro : $(POSTPRO_OBJS) $(QUIET_LINK)$(FC) $(FFLAGS) -o $@ $(filter %.o,$ˆ)

An option named clean is available. This one allows to erase files with extensions .o, .mod, .d. The executable files, the solution files and the gmon.out files are also deleted.

It is possible to change this option if necessary.

Fort.10

This file is the one where the parameters of the mesh, the chemistry... are set.

GRID

This part is dedicated to the parameters of the mesh.

- Ndim : spatial dimension of the simulation (1 for a 1D simulation, 2 for a 2D one, 3 for a 3D one).

- Nx, Ny, Nz : number of points respectively in the directions , and .

- Npx, Npy, Npz : number of processors allocated to the resolution, respectively in the directions , and . The total number of points in the i direction is , and the total number of processors used for the calculation is .

Then the geometry of the box where the simulation will take place is defined.

- Xmax, Ymax, Zmax : maximal lenghts of the area, respectively in the directions , and .

- IGridx, IGridy, IGridz : 1 if the grid is uniform in the considered direction, 0 otherwise.

- Alphax, Alphy, Alphaz : stretching ratios. (NO MORE AVAILABLE IN THE STAGGERED VERSION OF THE CODE)

- Betax, Betay, Betaz : stretching rates. (NO MORE AVAILABLE IN THE STAGGERED VERSION OF THE CODE)

- XP0, YP0, ZP0 : stretching positions. (NO MORE AVAILABLE IN THE STAGGERED VERSION OF THE CODE)

Finally, the kind of boundary conditions is defined with IType. 3 choices are given :

- Periodic (0),

- General (1),

- Symmetrical (2).

(NO MORE AVAILABLE IN THE STAGGERED VERSION OF THE CODE)

NSCBC

NSCBC means Navier-Stokes Characteristic Boundary Conditions. With these boundary conditions, the different types of input and output are taken into account. They have to be defined on each face of the box.

- Periodic (0)

- Non-reflecting outflow (1)

- Non-reflecting inflow (2)

- Hard inflow (3)

- Wall (4)

PARAM

The needed constants are defined here.

- Nsc : number of scalar, defined by the equations. For example, Nsc = 3 if the scalars are , and .

Remark: If we considere a reactive case, Nsc=1 will refer to the fuel, Nsc=2 will refer to the oxydizer.

- Re_ac : acoustic Reynolds of the flow.

c : sound speed ; L : characteristic length. At this stage the user should be clear in which unity he defines his geometry (1 mm, 1 cm ?), the density and the viscosity of the fluid at the reference temperature, as well as the sound speed. exemple: </math> c=340 m/s </math>, </math>L=1mm=0.001 m</math>, </math>\rho=1,2 kg/m^{3}</math>, </math>\mu=1,85. 10^{-5} kg/m/s</math> lead to

- Gamma : ratio between heat capacity at constant pressure and heat capacity at constant volume.

- Pr : Prandtl number of the flow.

Where is thermal difffusivity ratio.

- Sc : Schmidt number of each species. Species 1 is the fuel, species 2 air and species 3 a neutral one.

REACTION

In this part, the parameters affecting the chemistry are defined.

- I_react : if 1, the chemistry will be resolve, if 0, it won't.

- CFL_react : CFL (Courant-Friedrichs-Lewy condition) of the reaction.

- Alpha : thermal expansion ratio.

: temperature of the burnt gases ; : temperature of the unburnt gases.

- Beta : Zeldovitch number.

: temperature of the activation barrier so that the reaction can happen.

- Damla_factor : Damköhler factor which defines the operational conditions of a reaction.

- NuF*MF and NuO*MO : stoichiometric coefficient respectively for the fuel and the oxidant.

M : molar mass of the species.

- YF_O and YO_O : Mass fraction of fuel and oxidizer the reference flows of fuel and of oxydizer.

Exemple: Air and fuel are injected separately: so YF_O=1 and YO_O=0.23 .

FILES

In this section, the back-up files are managed (name, creation frequency...). These files are storing raw data.

- PREFIX : prefix of the filenames.

- I_fresh_run : if 1, a new calculation is started. If 0, the code restarts a calculation at a specified point.

- I_read_unit : the code will continue the calculations starting from the file with this number.

- I_save_unit : number of the first file while calculating.

- I_print : number of iterations that have to be done to print a message.

- CFL_no : settles the equation CFL.

- I_iter_save : number of iterations that have to be done to create a data file.

- Time_save : time to save the elapsed time.

- N_iter_stop : maximal number of iterations to stop the calculation.

- Time_end : maximal time to stop the calculation.

POST

This section deals with the post-treatment parameters.

- PREFIXpost : prefix of the files that will be subjected to the post-treatment.

- Ichoice : format of the output data. 1 to use Paraview, 2 to use Gnuplot.

- Xstart : first read data file that will be post-treated.

- Xend : last read data file.

- Xinc : increment to jump files.

- Ystart : first output file to be written.

At this stage some cleaning of the code would be useful for futur users.

- NormDir : plane normal direction (1,2,3). (WARNING in all_post NormDir may be read as REDIM in all_post.f90, it is a bug that hasn't been fixed yet, the best is to let all the following value at 0)

- PlaneCoord : position of the plane on XP2(NormDir), between 1 and for the direction i.

- REDIM : if 0, the results will be adimensional, if 1 they will be dimensional.

- Lo* : reference length (1m).

- Co* : reference sound speed (1m/s).

- Rho_o* : reference density ().

- To* : reference temperature (1K).

SCALAR This part is made to let the user customize the code, by entering parameter that he could refer to using the subroutine LECTUS with the tag scalar as it is made for exemple in the file all_post.f90. The value of the scalars are given here.

- Uo : speed of the flow.

- Cv : heat capacity at constant volume.

- Rv, Xo, Yo : parameters for particular cases.

Fort.10.sh

Fort.10.sh is a file written in shell language in purpose to ease the creation of initial directories for the submission of new jobs.

As it has been first thought, it works with the file launch-run.sh. In launch-run.sh the variables NX, NY, NZ (that define the mesh size per processor) and the variables NPX, NPY, NPZ (that define the number of processors in each direction) are defined to be exported in fort.10.sh. In this way, several calculations can be launched by changing only a few parameters.

Initialization example :

sh launch-run.sh -g

The option -g calls the subroutine generate(). This subroutine creates a directory named by the variable "dir". In this directory, two other directories will be created. A directory "IN" will contain the input files while a directory "OUT" will contain the output files. Then the template of the executable "NAME" is redirected towards a file named fort.10. The file fort.10 will be located in the directory "IN". This step is realized like this :

sh $Name.template > $dir/IN/fort.10.

When the file fort.10 is created, the subroutine copies the executable calculating the case, the executable dealing the results as well as the file fort.11 in the directory "IN". Then the template of the job is redirected towards the file named "job".

Vtk_bin_write

This file concerns the writting of output files on several processors. The users may have to change flags in the file depending on the cluster he wish to use. Two configurations are available and cover all the clusters used with the code. For exemple: it is possible to launch calculations either on ATON, or on VARGAS. In that case, it is necessary to put the value 1 for the computer which is going to be used. For instance, if the used computer is ATON, then the value entered will be 1 and so, it will be 0 for the computer VARGAS :

#define ATON 1 #define VARGAS 0

Otherwise, if the used computer is VARGAS, then the value entered will be 1 and it will be 0 for the computer ATON :

#define ATON 0 #define VARGAS 1

Be careful, some options will be possibly modified in function of the cluster.

The distribution of processors can be defined in function of :

- Coordinates.

- Vectors : in that case it will depend on the dimension of the problem, NDIM.

- Scalar : this function will define the id number of the processor.

Job

The job file is used if the calculation of the problem is made on a computer which is not local.

The extension of this file is .ll because it is a LoadLeveler file.

A job for Antares has to be created like this :

- Working name : locating more easily the calculations.

@ job_name = $NAME-$dir

- Standard exit file :

@ output = \$(job_name).o\$(jobid)

- Error exit file :

@ error = \$(job_name).e\$(jobid)

- Working type :

@ job_type = MPICH

- Total number of wanted processus :

@ total_tasks = $NP

- Maximal elapsed time for the job :

@ wall_clock_limit = 24 :00 :00

- Maximal memory by MPI process : if a process exceeds the specified limit, then the calculation is killed. Conversely, if this parameter is overestimated, the waiting time will be longer. It is preferable to execute calculations with a data limit subordinate or equal in 3gb. The really necessary memory by process has to get closer as much as possible to the wanted memory.

@ data_limit = 550mb

- Initial directory to send : this directory contains all the data for the calculation.

@ cri_initialdir = $PWD/$dir/IN

- Final directory for the results : the computer has to send the results to this directory.

@ cri_finaldir = $PWD/$dir/OUT

- User mail : an e-mail will be send in case of overtaking of elapsed time.

@ notify_user = name@adress.fr

- Buffering working directory :

cd $LOCAL_WORK_DIR

The arborescence of the file must be defined below this line. Generally, it corresponds to the arborescence of the final directory.

The result files are moved in a directory named Spool before being copied in the final directory.

Launch-run.sh

This file is a tool and is not essential to run the code, but can save a lot of time in case of a parameters study. For exemple it may be interesting to have a way to change the parameters automatically in order to reduce the possibility of mistakes.

This file automates the changes to be done on the quantities of mesh points or processors in each direction. Instead of giving the value for Nx, Ny, Nz, Npx, Npy and Npz in the control file, a new file (template) is created from the original one. In this template, the value are replaced by a notation : in the direction i, the number of points is $NI and the number of processors is $NPI. The whole text is inserted in the command echo" ". The beginning of the file is shown below.

echo" ==================================================== ALLEGRO1-2-3D CONTROL FILE ==================================================== # GRID # $NDIM Ndim $NX Nx $NY Ny $NZ Nz $NPX Npx $NPY Npy $NPZ Npz ... "

The value that will be used are then written in the launch-run.sh. This file has to begin with #!/bin/sh in order to be executable for the shell. Each case of calculation is written on a sole line.

#!/bin/sh NDIM=3 NX=40 NY=40 NZ=40 NPX=1 NPY=1 NPZ=1 ./fort.10.sh $@ NDIM=3 NX=40 NY=40 NZ=40 NPX=2 NPY=1 NPZ=1 ./fort.10.sh $@

The template will pass on the data to the fort.10.sh, which in turn will create the fort.10 file according the value given by the launch_run.sh.

In this example, the first calculation is in 3D, uses a 40x40x40 mesh and works on one processor. The second one is also in 3D, but the size of the mesh is 80x40x40 and works on 2 processors.

When doing a list of cases, there are 2 possibilities to run it :

- the code runs once each case one after the other (as shown above)

- or the code runs only one case by one. In this case, only one line is not a comment, and the calculation has to be launched manually after every change.

Organization of H-Allegro

The routines and subroutines used by the H-Allegro solver are presented below. They are shared out among 5 folders, namely :

- DER

- GRID

- POST

- SOLV

- UTIL

Since the code uses various grids, it is necessary to define the changes that operations are doing. In other words, the differentiations or the interpolations include a grid change, so they have to be defined for each grid. The index 1 in the names of the subroutines stands for a change from the scalar grid to a momentum component one (called ), while the index 2 stands for the opposite change, going from the momentum component grid () to the scalar one (S).

For example, if the derivative of (a scalar) is wanted on the scalar grid, there are two possibilities to proceed :

- the scalar is differentiated with Diff1 (which changes the grid from S to ) and then interpolated with Interp2 (changes the grid from to S).

- the scalar is interpolated with Interp1 (changes the grid from S to ) and then differentiated with Diff2 (changes the grid from to S).

In both cases, will be obtained on the S grid as wanted.

DER/DF6

The folder PADE is not described because the Pade scheme is not used in the MPI version of H-Allegro.

The number at the end of the names indicates that the calculation includes a change of grid. 1 means going from the S grid to the one, while 2 means the contrary, going from the grid to the S one.

- der.f90 : the subroutines to do the various interpolations and differentiations.

- INIT_DER : initialization.

- DERIVATIVE1, DERIVATIVE2: derivative of a function along the chosen axis.

- INTERPOLATE1, INTERPOLATE2 : interpolation of a function along the chosen axis.

- INTERP_SURF1, INTERP_SURF2 : interpolation of a function along the axis Iaxe, on a face with a normal Iface (Iface is perpendicular to Iaxe).

- INTERP_LINE1, INTERP_LINE2 : interpolation of a function along the axis Iaxe, on a line with a normal Iface (Iface is perpendicular to Iaxe).

- der_sub.f90 : the subroutines to do the differentiations and interpolations, taking the boundary conditions into account.

- echange : exchanges data with the nearby processors.

- echange_line : exchanges data with the processors on another line.

- echange_surf : exchanges data with the processors on another surface.

- derivative1_on_slice, derivative2_on_slice : differentiation when the boundary conditions are symmetric.

- derivative1_periodic_on_left, derivative2_periodic_on_left : differentiation when the boundary conditions are periodic on the left.

- derivative1_periodic_on_right, derivative2_periodic_on_right : differentiation when the boundary conditions are periodic on the right.

- derivative1_general_on_left, derivative2_general_on_left : differentiation when the boundary conditions are general on the left.

- derivative1_general_on_right, derivative2_general_on_right : differentiation when the boundary conditions are general on the right.

- interpolate1_on_slice, interpolate2_on_slice : interpolation when the boundary conditions are symmetric.

- interpolate1_periodic_on_left, interpolate2_periodic_on_left : interpolation when the boundary conditions are periodic on the left.

- interpolate1_periodic_on_right, interpolate2_periodic_on_right : interpolation when the boundary conditions are periodic on the right.

- interpolate1_general_on_left, interpolate2_general_on_left : interpolation when the boundary conditions are general on the left.

- interpolate1_general_on_right, interpolate2_general_on_right : interpolation when the boundary conditions are general on the right.

GRID

- all_dimg.f90 : initialization of the dimensions and the various variables.

- grid_bounds.f90 : manages the reading of the domain faces on the different grids.

- INIT_BOUNDS : determines the presence of faces, edges and corners NSCBC3D.

- COPY_FACE_from_S, COPY_FACE_from_Vi, COPY_FACE_from_Vij : copies faces according the grid.

- SUB_COPY_FACE : algorithm to copy the data of a face.

- PASTE_FACE_to_S, PASTE_FACE_to_Vi : pastes according the grid.

- SUB_PASTE_FACE : algorithm to paste the data of a face.

- ADD_FACE_to_S, ADD_FACE_to_Vi : adds a face according the grid.

- SUB_ADD_FACE : algorithm to add a face.

- grid_dim.f90 : creation of the grid.

- LECTUS_GRID : initializes the grid with the parameters, the number of points and processors along each directions, checks that the indicated number of processors is correct.

- GETINDEX : indexes the points.

- grid.f90 : initialization of the grid.

- INIT_GRID : initializes the grid, taking into account the overlaps and calculates the size of a cell.

- plot.f90 : prepares the use of Gnuplot.

- SAVE_GNUPLOT : creates files with the coordinates of the point and the corresponding values.

- SAVE_GNUPLOT_FIELDS : creates files with the fields at different times.

- SAVE_GNUPLOT_1D : does the same work as SAVE_GNUPLOT for 1D simulation.

- post_plot.f90 : creates files for the post-processing.

- PARAVIEW_BINARY : manages the creation of files according the number of dimensions for Paraview.

- OUT_GNUPLOT : manages the creation of files according the number of dimensions for Gnuplot.

- vtk_bin_write.c : manages the distribution of processors and the choice of the calculator.

POST

- all_post.f90 : manages the post-processing, with the initialization and the creation of files.

- LECTUS_POST : reads the data.

- INIT : initialization of the variables on the various grids.

- GETVECT : resizing or not.

- ALLOCATE_FIELDS : allocation of the fields according to the names.

- COMPUTE_FIELDS : resizes if needed and calculates the fields.

- READ_FILES : depending on the rank of the processor, opens a new file and reads in another one the time and the values of the scalars.

- ROTATIONNEL : calculates the curl along the number of dimensions.

- PROJECT : projection of a vector component onto the S grid.

- DILUTION : calculation of the dilution (the thermal diffusivity ratio varies according to the space).

- all_post_cut.f90 : all_post_f90 without some subroutines (GETVECT, ALLOCATE_FIELDS, COMPUTE_FIELDS, ROTATIONNEL, PROJECT and DILUTION), but has another one.

- SAVE_T : creates files keeping the values of the temperature.

SOLV

- all_chemistry.f90 : manages the chemistry of the code.

- LECTUS_REACT : initializes the parameters of the reaction.

- ARRHENIUS : calculates Arrhenius law ().

- ENERGY_SOURCE : calculates the RHS of the energy source term.

- SCALAR_SOURCE : calculates the RHS of the scalar source term.

- REACTION_RATE : calculates the reaction rate.

- CHECK_CFL_REACT : calculates the time-step of the reaction.

- FLAME_SPEED : calculates the propagation speed of the flame.

- all_solver.f90 : resolution of the case.

- NEGATIVE : spotting of the bugs.

- CONVECTION1 : calculates the convection, the result is on the S grid.

- CONVECTION2 : calculates the convection, the result is on the grid.

- CONTINUITE : calculates (continuity equation) on the S grid.

- ENERGY : calculates (conservation of energy) on the S grid.

- MOMENTUM : calculates (conservation of momentum) and the stress tensor .

- SCALAR : calculates the scalars and the diffusion terms.

- RHD_INIT : initializes the variables (speed, pressure, temperature...).

- RHD : calculation of the variables (energy, momentum...), the reaction rate and the boundary conditions.

- TIME_STEPPING : calculates the time advancement (solver Runge-Kutta, order 3).

- CHECK_CFL : calculates the time-step.

- all_dim.f90 : declares the dimensions of the functions send_left, recv_left...

- all_equ.f90 : calculates some variables.

- RHOETHERM : calculates .

- SOUND : calculates the sound speed .

- TEMP_PRES : calculates the pressure and the temperature.

- ENTROPIE : calculates the entropy.

- VISCOSITY : calculates the viscosity.

- all_nscbc.f90 : manages the boundary conditions.

- LECTUS_NSCBC : allocates the type of boundary conditions to each face.

- INIT_BC : initialization and allocation of tables for the faces.

- COPY_BOUNDS_S, COPY_BOUNDS_Vi, COPY_BOUNDS_Vij : copy of a value in the chosen grid on the faces, edges and corners of the S grid.

- OUTFLOW_3D : correctives terms for the DQM to the faces (correction of normal convection terms and viscosity terms).

- SOFT_INFLOW_3D : change of the DQM on a face (adding the transverse terms of convection).

- HARD_INFLOW_3D : change of the DQM on a face (values of the speed and energy forced, calculation of the transverse terms, viscosity and convection terms).

- NOSLIP_WALL_3D : change of the DQM on a face (transverse and normal values of forced to 0, calculation of the transverse terms, viscosity and convection terms and ).

- FACE_PERIODIC : change of the DQM (correction of the normal convection terms).

- NSCBC : implementation of the changes for the faces.

- all_param.f90 : reading of some parameters and calculations.

- LECTUS_PARAM : reading of the parameters (Sc, , , Pr) and calculation of , the atmospheric pressure and the perfect gas constant.

- LECTUS_FILES : reading of the parameters for the output and the time-stepping.

UTIL

- lectus.f90

- LECTUS_MARK : reads and compares marks.

- minmax.f90 : search of minimum and maximum.

- GETMIN : finds the minimum of a function and the corresponding point.

- GETMAX : finds the maximum of a function and the corresponding point.

- GETERRMAX : finds the maximal error.

- GETMINMAX : finds the maximum and the minimum of a function and the corresponding points.

- mtfort.f90 : generates pseudorandom real numbers.

- random.f90 : generates various pseudorandom numbers.

- thi.f90 : generates an Isotropic Homogeneous Turbulence (THI) for a colocated grid.

- LECTUS_PREFIX : reads the prefix of the files.

- LECTUS_THI : reads the parameters used for the THI.

- ADD_BOUNDS2D : adds boundaries in 2D.

- ADD_BOUNDS3D : adds boundaries in 3D.

- SAVE_FIELDS : creates files with the values of the fields.

Post-treatment tools

Test cases

Poiseuille

Profiling and Scalability

Optimum size of blocs

In order to avoid the loss of time, it is advised to use the optimum size of blocs and the optimum cores number, which depend on the studied case as well as the cluster.

The optimum size of blocs is determined with the help of the reduced efficiency :

is the execution time for and a total number of nodes .

The values are plotted against the total number of nodes per core. This curve is supposed to have a plateau corresponding to the optimum size of the blocs. If it is not the case, the test has to be done again with smaller sizes of blocs. Once the optimum size of blocs found, it will be used in the following tests and calculations.

To be more precise, the Weak Scaling method is used to compare the evolutions of speed-up and parallel efficiency according the cores number. The configuration with less difference with the theory will be favoured.

The optimum cores number is then defined for the chosen configuration using the evolution of the speed-up : when the real curve stops following the theorical one, the optimum number has been exceeded.

Scalability

Principle

When a code works on many processors, the calculation domain is divided and the code may lose performances because of the communications between the processors. The scalability test [4] checks the behaviour of the code when the number of cores increases, especially that the performances are quite stable.

Two tests are possible :

- Weak Scaling, the work load per core remains constant. This means that when the number of cores increases, the size of the cells decreases.

- Strong Scaling, the size of the problem remains constant whatever the number of cores working. The work load decreases when the number of cores increases.

Some conditions have to be followed when testing the code. The maximal number of cores is defined by the biggest planed configuration. From this, a number of cores for the reference execution can be specified :

Moreover, it is advised to use up to 3 intermediate values in order to visualize the evolution of the speed-up and the parallel efficiency.

- Weak Scaling :

- Speed-up

- Parallel efficiency

- Speed-up

- Strong Scaling :

- Speed-up

- Parallel efficiency

- Speed-up

Since the goal of this test is also to find out the maximal number of cores used without loss of perfomances, it is not necessary to run the code with a high number of iterations for the calculation. Actually, 10 iterations are enough to test.

Execution time

In order to determine the speed-up and the parallel acceleration, the execution time is required. The box below shows the way to have the execution time for the first processor, which orders the others, printed in a new file.

character*13:: FILEMPI real(8) :: cpu_time cpu_time = MPI_WTime() # Time Stepping temps_ex = MPI_Wtime() - cpu_time if(rang_dans_monde.EQ.0) then FILEMPI='infotemps.txt' open(unit=1234567,file=FILEMPI,form='formatted',status='replace') write(1234567,*) temps_ex close(unit=1234567,status='keep') endif # End

Profiling

Profiling is a way to optimize the code skill. It consists in the determination of the parts which use most resources.

Thanks to the command "gprof", it is possible to create a report of execution. The executable which calculates the case must be compilated with the option -pg, then it must be executed in order to obtain the report, gmon.out.

The file gmon.out is divided into 2 parts :

- Call-graph profile : list of the routines in decreasing order of the CPU consummate time as well as the routines which are called from them.

- Flat profile : list of the routines in the decreasing order of the CPU consummate time and the number of calls.

Analysis of the flat profile

Description

- % time : percentage of total CPU time consumed by the routine.

- Cumulative seconds : partial sum of CPU time.

- Self seconds : CPU time of a routine.

- Calls : number of calls of a routine.

- Self ms/call : average of CPU time consumed by call to the routine, exclusive time.

- Total ms/call : total time (exclusive + inclusive) consumed by call to the routine.

Analysis

Having to test a calculation on various combinations of processors, it seems clearly that the most consumer routines are :

- der_sub_MOD_interpolate1_on_slice

- der_sub_MOD_derivative2_on_slice

- all_solver_MOD_momentum

- der_sub_MOD_interpolate2_on_slice

For instance for a calculation on 4x1x1 processors, the flat profile will be the following :

figure

It indicates that the subroutine derivative2 on slice represents 20 % of total CPU time consumed. Thanks to the cumulative seconds, we can deduce that the total CPU time consumed egals 0.25 second. The number of seconds represented by the subroutine derivative2 on slice is about 0.05 second. This subroutine is invoked 545 times. We can say that the average number of milliseconds spent in this subroutine and its children per call is 0.09 ms.

Remark : If the calculation is run on 2x2x1 instead of 4x1x1, the number of calls of the subroutines derivative and interpolate periodic are divided by 2, while the number of calls of the subroutines derivative and interpolate on slice increases.

Analysis of the call-graph profile

This part is very interesting because it allows to know which routine calls another one and how long it spends. The routines are indexed by decreasing order of CPU time comsumption.

This report details the percentage of the total time that was spent in a function and its children. The total of this percentages is not equal to 100 %. For instance the % run time for the main egals 100 % because it is a routine which takes a lot of time, but the routine solving the time stepping has a run time of 97.3%. This report gives also the amount of time that was propagated from the function into the parent (self), the amount of time that was propagated from the children into the function (children), the number of calls from a routine to another.

Figure

For instance, if we consider the subroutine der_sub_MOD_echange [5], we can see that the parent functions are der_MOD_interpolate2 [16], der_MOD_derivative1 [15], der_MOD_derivative2 [11], der_MOD_interpolate1 [8]. This subroutine is called 1014 times by the routines der_MOD_interpolate2 (93 calls), der_MOD_derivative1 (174 calls), der_MOD_derivative2 (327 calls) and der_MOD_interpolate1 (420 calls). The time spent in this function is about 0.01 second.

The children functions of this subroutine are der_sub_MOD_interpolate1_on_slice [7], der_sub_MOD_derivative2_on_slice [9], der_sub_MOD_derivative1_on_slice [17], der_sub_MOD_interpolate2_on_slice [18], der_sub_MOD_interpolate1_periodic_on_right [32] and so on.

The total amount of time propagated into this subroutine by its children is 0.13 second.

We can see that the subroutine echange calls 545 times the subroutine derivative2 on slice. This last one is called only by the subroutine echange since it is indicated 545/545.

The subroutine echange spends 56 % of the total time in run time.

Optimization of communication time

The run time consists of the calculation time and the communication time. Profiling aims also to minimize this communication time which can be decomposed in the time of latency, of additional cost and of transfer.

The additional cost is the time of preparation of a message.

How to optimize the communication time ?

The first step is to cover the communications by the calculations.

Then, it is necessary to avoid copying out messages in buffering memory spaces and to minimize additional costs led by the repetitive calls to the subroutines of communication.

Finally, it is better to send an only big message rather than several small messages.

How to know the execution time of a routine ?

This information is given by the subroutine of MPI communication : MPI_WTIME().

This subroutine informs the user of the run time as it is called. Therefore, if this subroutine is called at the beginning and at the end of a routine, the run time can be deducted.

What way is it preferable to use for the sending and the reception of messages ?

Standard sending : If the MPI makes a buffering copy of the message then the sending ends when this one is ended. Else, the sending ends when the reception of the message is ended.

Blocking synchronous sending : This sending way uses the subroutines MPI_SSEND() and MPI_RECV().

The blocking synchronous sending allows to avoid buffering copies of messages, in this way it also avoids additional costs.

Thanks to it, a factor 2 is gained with regard to the standard mode.

Non-blocking synchronous sending : This way uses the subroutines MPI_ISSEND() and MPI_IRECV().

The non-blocking way allows to cover the communications by the calculations. The sending of a message ends when the reception was posted and when the reading of the message is ended.

The sending is coupled with the reception thus a factor 3 is gained with regard to the standard sending.

So that this mode can work correctly, it is necessary to add MPI_WAIT() after MPI_ISSEND() and MPI_IRECV(). It allows to synchronize the process till the end of the request.

Model of calculation

Time discretization

A third order Runge-Kutta method is used. This one allows to improve the precision of time integration and the stability.

The equations used in H-Allegro are defined below :

is the matrix of gross conservative data at the ith step and at the time t.

is the same matrix as before at the time .

and are Np x Nvar matrix, with Np, number of points and Nvar, number of variables. Those matrix store the conservative raw variables.

Spatial discretization

Grid arrangements

H-Allegro uses a hybrid-colocated-staggered grid which allows a good accuracy and robustness of the code, while having an unambiguous definition of the boundary conditions.

For example, in 2D and for a rectangular structured grid, there are 4 grids, namely :

- a cell-centered grid, S, dedicated to the scalars , and

- different face-centered grids corresponding to momentum (or vector) components. In this case, 2 grids exist : for and for .

- a grid dedicated to the boundary conditions and that minimises the number of interpolations.

Finite differences

The finite differences method was discovered by Taylor Brook. Adapted by Lagrange to the resolution of differential equations, it makes it possible to interpolate or differentiate a discrete function, with a chosen accuracy.

is the step.

Actually, the accuracy of the scheme depends on the Stencil, which means it depends on the number of points taken into account in order to calculate the differential. A Stencil of 2 means that 2 points of each side are considered.

Taking into account the chosen numerical scheme and a symmetrical Stencil, the differential of a function at the point n is written :

avec et

N : value of the Stencil. : balancing ratio, giving more importance to the points near the considered one.

Formulae for the hybrid-colocated-staggered scheme :

- First point

- General case :

Interpolation and differentiation operations

In order to calculate the various variables, the code uses interpolations and differentiations. Multidimensional arrangements are considered as combinations of monodimensional arrangements, which means that operators defined in 1D will be also used in 3D to do interpolation and differentiation operations.

- The interpolation is specially used in order to change the grid (for example, needing the value of the scalar to obtain the value of a component). There are 2 interpolations possible :

- changing from the scalar grid to a momentum component (or ) one, using the subroutine Interp1,

- changing from a momentum component (or ) grid to the scalar one, using the subroutine Interp2.

- The differentiation is used to differentiate, but it also changes the grid.

- Diff1 differentiates and changes the grid from the scalar one to a momentum component one,

- Diff2 differentiates and changes a momentum component grid to the scalar one.

Notes et Références

- ↑ Using staggered grids with characteristic boundary conditions when solving compressible reactive Navier-Stokes equations - E. Albin, Y. D'Angelo and L. Vervisch

- ↑ Minimal storage time-advancement schemes for spectral methods - A. Wray

- ↑ Van Kalmthout, p.50 Theoretical and Numerical combustion - T. Poinsot and D. Veynante, edition of 2001

- ↑ http://www.idris.fr/su/Scalaire/babel/passage\_production.html